Ralph Wiggum Technique Explained: Running AI Coding Agents

- 19 Feb 2026

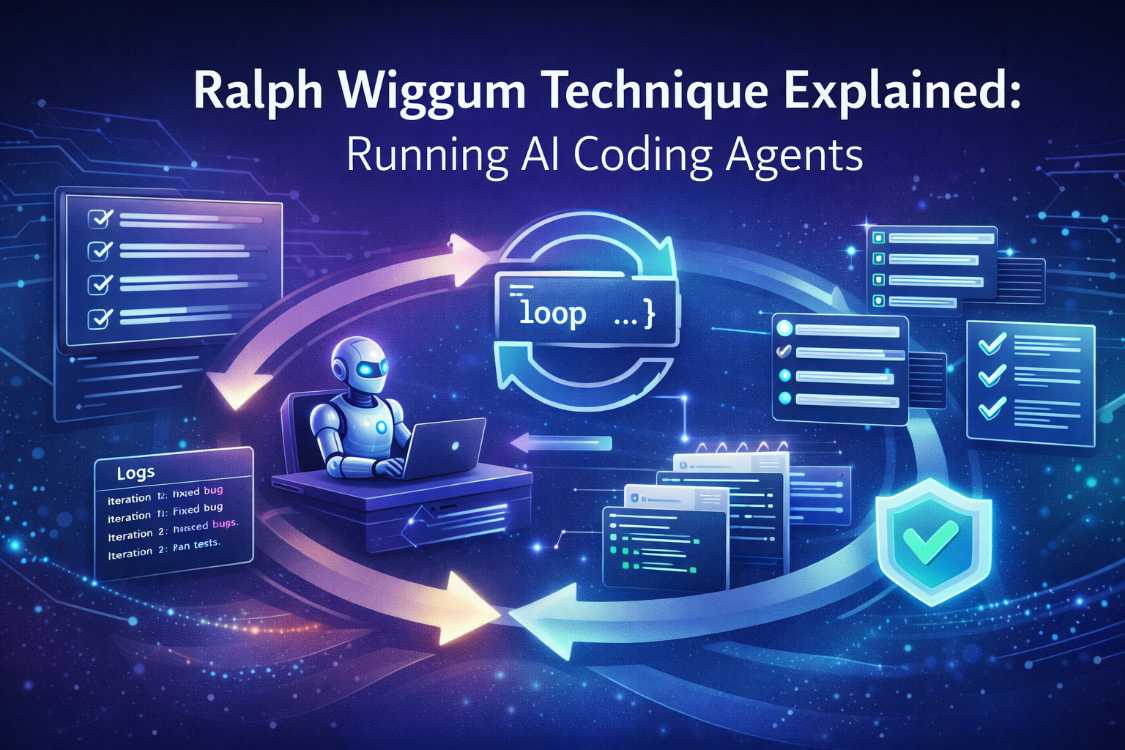

The Ralph Wiggum technique is a practical method for running AI coding agents in a loop so they keep working until objective completion criteria are met, rather than stopping when the model thinks it’s done. In its simplest form, “Ralph is a bash loop”: re-run the agent, feed it the same goal plus repo context, and enforce gates like tests, lint, and acceptance checks. When implemented with guardrails (permissions, logging, review), Ralph-style loops can safely accelerate backlog work, refactors, and test-coverage improvements.

Key Takeaways

- Ralph-style looping makes agents useful for multi-hour tasks by forcing iterative progress toward defined acceptance criteria.

- The loop is only as good as your definition of done—tests and checks must drive completion, not vibes.

- “Fresh context per iteration” often reduces compounding errors; persistent memory should be explicit (git, progress files).

- Plugins/stop-hooks can prevent premature exits and keep the agent focused on finishing.

- Safety matters: least privilege, sandboxed execution, and human approval for risky actions.

- Observability (iteration logs, diffs, test results) is what turns “autonomy” into manageable automation.

- The best ROI use-cases are constrained: backlog tickets, refactors, docs, tests, and migrations—not ambiguous product work.

What is the Ralph Wiggum technique?

The Ralph Wiggum technique is an iterative “agent loop” approach to AI-assisted software development: you repeatedly run an AI coding agent on the same task until it meets explicit completion criteria. The loop prevents the agent from exiting early, and progress persists through repo state (commits), structured task files, and logs. It’s designed for long-running, minimally supervised work—when guardrails are in place.

Ralph Wiggum Technique Explained: what it is, why it works, and what “autonomous AI loops” really mean

What: The technique is a loop that repeatedly re-invokes an AI coding agent until objective completion signals appear (tests pass, checklist done, promise tag found, etc.).

Why: AI agents often stop too early (“looks done”) or get lost in large prompts. A loop creates repeated opportunities to correct, re-evaluate, and continue.

How: Use a task spec + repo context + hard gates, and re-run the agent automatically until completion.

The Ralph Wiggum technique is best understood as a process, not a model.

The core idea: “don’t let the agent quit until the acceptance criteria are met”

Several public implementations describe the technique as forcing the agent to keep going until a completion condition is satisfied—often using a loop and explicit completion tokens or criteria.
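As a rough illustration of that core idea, here is a minimal Python sketch of the loop. It is not taken from any specific implementation: `run_until_done`, `run_agent`, and `is_done` are hypothetical names, and the agent invocation is passed in as a plain callable so the sketch stays tool-agnostic.

```python
from typing import Callable


def run_until_done(
    run_agent: Callable[[int], None],
    is_done: Callable[[], bool],
    max_iterations: int = 20,
) -> int:
    """Re-invoke the agent until is_done() is true; return iterations used.

    run_agent and is_done are hypothetical hooks: the first performs one
    agent invocation, the second runs an objective check (tests, lint,
    completion token). The cap keeps the loop from running forever.
    """
    for iteration in range(1, max_iterations + 1):
        run_agent(iteration)
        # Completion is decided by the check, not by the agent's own opinion.
        if is_done():
            return iteration
    raise RuntimeError(f"not done after {max_iterations} iterations")
```

The max-iteration cap is a design choice worth keeping even in a toy version: without it, a task that can never pass its checks would loop indefinitely.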

Why this beats “one giant prompt”

One giant prompt creates two problems:

- Context overload: too many requirements at once.

- No checkpoints: the agent can’t reliably self-verify across many steps.

Ralph-style loops introduce repeatable checkpoints:

- run → test → fix → re-run → verify

That’s closer to how real engineering happens.

The Ralph Wiggum Technique origin story and the “Ralph is a bash loop” mental model

What: The “Ralph is a bash loop” phrase is used to emphasize simplicity: repeated agent execution until done.

Why: The simplicity is the point—no magic, just disciplined iteration.

How: Treat it like an automation pattern you can implement with your preferred agent runner.

The Ralph Wiggum technique is commonly described as a looping mechanism (“Ralph is a Bash loop”) rather than a new model.

Where the name comes from and how the loop evolved in practice

Multiple write-ups trace the technique’s naming and growth as developers began applying it to long-running coding tasks.

The core pattern stayed the same; the tooling got better: PRDs, progress files, structured task lists, and stronger safety controls.

What changed once developers started running agents for hours

When loops run for hours, two things become non-negotiable:

- Quality gates (tests, lint, typecheck).

- Safety boundaries (permissions, secrets, and “E-stop” intervention).

This aligns with broader “autonomous operations with guardrails” thinking: autonomy must be interruptible and observable.

The Ralph Wiggum Approach: a hands-on loop architecture for running AI coding agents

What: A loop architecture defines what goes in each iteration and what must come out.

Why: Without structure, autonomy becomes randomness.

How: Use a simple “spec → plan → implement → verify → log” cycle.

The Ralph Wiggum approach is essentially an iteration contract: keep going until verifiable completion.

Inputs: PRD, task list, repo state, and “definition of done”

Minimum recommended inputs:

- PRD / ticket: what success means

- Task list: break down into checkable items

- Repo context: current code, tests, config

- Definition of Done: pass/fail gates + acceptance checks

Practical observation: teams get the best results when they give agents narrow tasks with crisp outcomes, not “build the whole product.”
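One way to make those four inputs concrete is to bundle them into a small structure and refuse to start the loop when any of them is missing. The sketch below is an assumption about how a team might model this, not a standard format; `TaskSpec` and its field names are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TaskSpec:
    """Hypothetical container for the loop inputs described above."""

    prd: str                      # what success means
    tasks: list[str]              # checkable items
    repo_path: str                # where the code, tests, and config live
    done_commands: list[list[str]] = field(default_factory=list)  # pass/fail gates

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the spec is usable."""
        problems = []
        if not self.prd.strip():
            problems.append("PRD is empty")
        if not self.tasks:
            problems.append("task list is empty")
        if not self.done_commands:
            problems.append("no definition-of-done commands")
        return problems
```

Calling `validate()` before the first iteration is a cheap way to catch the “vague goal, no gates” case before any agent time is spent.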

Outputs: commits, progress logs, and test results

A good loop produces auditable artifacts:

- commits with descriptive messages,

- a progress.md or progress.txt updated each iteration,

- test output summaries,

- a final checklist stating what was completed and what wasn’t.

Some implementations explicitly persist “memory” through git history and progress files.
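A progress file can be as simple as an append-only markdown log. The following sketch assumes a file such as progress.md and an entry layout made up for this example; `append_progress` is a hypothetical helper, not part of any tool.

```python
from datetime import datetime, timezone
from pathlib import Path


def append_progress(path: Path, iteration: int, summary: str, tests_passed: bool) -> str:
    """Append one iteration entry to the progress file and return the entry text."""
    stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
    status = "PASS" if tests_passed else "FAIL"
    entry = (
        f"## Iteration {iteration} ({stamp})\n"
        f"- tests: {status}\n"
        f"- summary: {summary}\n\n"
    )
    # Append mode keeps earlier iterations intact, so the file doubles as an audit trail.
    with path.open("a", encoding="utf-8") as fh:
        fh.write(entry)
    return entry
```

Committing this file alongside the code changes means the next iteration, or a human reviewer, can read what happened without replaying the session.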

Designing completion criteria: how to stop autonomous AI loops from shipping junk

What: Completion criteria are objective signals that the task is done.

Why: Agents will otherwise optimize for “plausible completion” rather than correctness.

How: Use layered gates: static checks + tests + scenario validation.

Autonomous AI loops are only safe when you define “done” in machine-checkable terms.

Acceptance tests, lint gates, and “must-pass” checks

Use a 3-layer gate:

- Static checks: lint, formatting, type checks

- Unit/integration tests: run relevant suites

- Acceptance checks: endpoints respond, pages render, critical flows pass

If you don’t have tests, your “done” criteria become subjective and loops will wander.
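The three layers can be expressed as an ordered list of commands where the first failure stops the run. This is a minimal sketch: `run_gates` is a hypothetical helper, and the actual commands depend entirely on your project’s toolchain.

```python
import subprocess
import sys


def run_gates(gates: list[tuple[str, list[str]]], cwd: str = ".") -> tuple[bool, list[str]]:
    """Run named gate commands in order and stop at the first failure.

    Each gate is (name, argv). A gate passes when its exit code is 0.
    Returns (all_passed, report_lines).
    """
    report = []
    for name, command in gates:
        result = subprocess.run(command, cwd=cwd, capture_output=True, text=True)
        ok = result.returncode == 0
        report.append(f"{name}: {'pass' if ok else 'fail'}")
        if not ok:
            return False, report
    return True, report


# Stand-in commands so the example runs anywhere; swap in your real lint,
# test, and acceptance commands.
EXAMPLE_GATES = [
    ("static", [sys.executable, "-c", "raise SystemExit(0)"]),
    ("tests", [sys.executable, "-c", "raise SystemExit(0)"]),
    ("acceptance", [sys.executable, "-c", "raise SystemExit(0)"]),
]
```

Running the cheapest gate first (static checks) and stopping on the first failure keeps each iteration’s feedback fast.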

The simplest rubric that works across teams

A practical rubric to paste into tickets:

- Implements requirements in PRD

- Adds/updates tests

- All checks pass (lint/type/test)

- No secrets committed

- Release notes / changelog entry

- “Known limitations” documented

Tooling patterns: AI Coding With Ralph Wiggum using plugins, CLIs, and stop-hooks

What: Tooling is how the loop is enforced—especially preventing the agent from “exiting early.”

Why: Most agents have a natural “I’m done” behavior; Ralph overrides it until criteria are met.

How: Use stop-hooks, completion tokens, and a loop runner around your agent CLI.

AI coding with Ralph Wiggum is often implemented by repeatedly re-invoking a coding tool in a controlled loop.

What the stop-hook mechanism does and why it matters

One publicly described implementation uses a stop-hook to intercept the agent’s exit and re-feed the prompt unless a completion promise token is present.

This matters because it turns “agent confidence” into “agent compliance” with explicit completion signals.
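The decision a stop-hook makes can be sketched as a single function: given the agent’s final output, should the exit be blocked? The tag format and the names below (`PROMISE_PATTERN`, `should_continue`) are assumptions for illustration only and do not reproduce any particular plugin’s API.

```python
import re

# Hypothetical tag format for the completion promise.
PROMISE_PATTERN = re.compile(r"<promise>(.*?)</promise>", re.DOTALL)


def should_continue(agent_output: str, expected_promise: str) -> bool:
    """Return True when the exit should be blocked and the prompt re-fed.

    The loop continues unless the output contains the promise tag with
    exactly the expected text.
    """
    match = PROMISE_PATTERN.search(agent_output)
    if match is None:
        return True
    return match.group(1).strip() != expected_promise
```

A token on its own only proves the agent claimed completion, so in practice it makes sense to pair this check with the test and lint gates rather than rely on it alone.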

When to prefer fresh-context loops vs continuous sessions

Two patterns:

- Fresh context per iteration: reduces compounding confusion; memory is external (git, progress file).

- Continuous sessions: can be faster for short tasks but risk context drift and hallucinated assumptions.

Rule of thumb: long tasks benefit from fresh iterations plus explicit artifacts.
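In a fresh-context loop, each iteration’s prompt is rebuilt from the task plus whatever external memory exists. The sketch below assumes a progress file as the only memory source and a prompt layout invented for this example; `build_iteration_prompt` is a hypothetical helper.

```python
from pathlib import Path


def build_iteration_prompt(task: str, progress_file: Path, max_progress_chars: int = 4000) -> str:
    """Build a fresh prompt from the task and the tail of the progress file.

    Only the most recent notes are included, so the prompt size stays
    bounded no matter how many iterations have run.
    """
    notes = progress_file.read_text(encoding="utf-8") if progress_file.exists() else ""
    tail = notes[-max_progress_chars:] if max_progress_chars > 0 else ""
    return (
        f"TASK:\n{task}\n\n"
        f"PROGRESS SO FAR:\n{tail or '(none yet)'}\n\n"
        "Continue from the progress notes and update them before you stop."
    )
```

Because nothing carries over except what was written down, any assumption the agent wants to keep has to be made explicit in the progress notes or in a commit.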

Safety & governance: permissions, secrets, and guardrails for autonomous agents

What: Safety controls limit damage and prevent data leakage.

Why: Agents can take actions that violate policy if given broad access.

How: Apply least privilege, sandbox execution, and human approvals.

RBAC, sandboxing, and least-privilege tool access

Security best practices for agents often emphasize role-based access control and limiting scope.

Practical controls:

- separate “read-only” vs “write” modes,

- restrict network access in CI sandboxes,

- rotate credentials and keep secrets out of logs.
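Keeping secrets out of logs usually means filtering every line before it is written. The following is a deliberately simple sketch: the pattern only catches `NAME=value` or `NAME: value` pairs whose name looks secret-like, and both `SECRET_ASSIGNMENT` and `redact` are hypothetical names. A real deployment would more likely rely on a dedicated secret scanner.

```python
import re

# Illustrative pattern only: matches assignments whose key contains
# api_key, token, password, or secret (case-insensitive).
SECRET_ASSIGNMENT = re.compile(
    r"([A-Za-z0-9_]*(?:api[_-]?key|token|password|secret)[A-Za-z0-9_]*)(\s*[=:]\s*)(\S+)",
    re.IGNORECASE,
)


def redact(line: str) -> str:
    """Replace the value part of secret-looking assignments with [REDACTED]."""
    return SECRET_ASSIGNMENT.sub(lambda m: f"{m.group(1)}{m.group(2)}[REDACTED]", line)
```

Applying `redact` at the single point where iteration logs are written is easier to audit than trusting every caller to remember it.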

Prompt injection, supply chain risks, and how to mitigate

Risks discussed in agentic security guidance include prompt injection and a larger attack surface once agents can act on external systems.

Mitigations:

- sanitize tool inputs,

- strict allow-lists for tool actions,

- signed dependencies, lockfiles, and SBOM checks,

- manual approval for dependency upgrades.
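A strict allow-list for tool actions can be sketched as a prefix check on the parsed command, with separate sets for read-only and write modes. The command sets and the names `READ_ONLY`, `WRITE`, and `is_allowed` are illustrative assumptions; note that installing or upgrading dependencies is intentionally absent from both sets.

```python
import shlex

# Hypothetical allow-lists: each entry is an argv prefix the agent may run.
READ_ONLY = {("git", "status"), ("git", "diff"), ("git", "log"), ("ls",), ("cat",)}
WRITE = READ_ONLY | {("git", "add"), ("git", "commit"), ("pytest",)}

# Tokens containing these characters are rejected so a permitted prefix
# cannot be used to smuggle in a second shell command.
SHELL_META = set(";|&$`<>")


def is_allowed(command: str, mode: str = "read_only") -> bool:
    """Return True only if the command starts with an allow-listed argv prefix."""
    allowed = WRITE if mode == "write" else READ_ONLY
    try:
        argv = tuple(shlex.split(command))
    except ValueError:  # unbalanced quotes and similar parse errors
        return False
    if not argv or any(SHELL_META & set(token) for token in argv):
        return False
    return any(argv[: len(prefix)] == prefix for prefix in allowed)
```

Anything not on the list, such as a package install, falls through to a human approval step instead of being executed.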

Common mistake: letting the agent “fix the build” by upgrading random dependencies. That’s how supply chain risk sneaks in.

Observability for agent loops: logs, traces, and “why did it do that?” debugging

What: Observability is how you understand and control agent behavior over time.

Why: Without logs, long-running loops create blind spots and low trust.

How: Log every iteration with diffs, test results, and decision notes.

What to log per iteration

Minimum per-iteration log:

- iteration number + timestamp,

- files changed,

- commands run,

- test outcomes,

- summary of decisions (“why I changed X”).

This aligns with the idea that autonomous code generation still needs human oversight and clear acceptance processes.
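The minimum per-iteration log above maps naturally onto one JSON object per line. This is a sketch under that assumption; `IterationLog` and `to_json_line` are hypothetical names, and the field set simply mirrors the bullet list.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class IterationLog:
    """One record per loop iteration, mirroring the minimum log fields."""

    iteration: int
    files_changed: list[str]
    commands_run: list[str]
    tests_passed: bool
    decision_summary: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat(timespec="seconds")
    )


def to_json_line(entry: IterationLog) -> str:
    """Serialize one record as a single JSON line, suitable for a .jsonl file."""
    return json.dumps(asdict(entry), sort_keys=True)
```

One record per line keeps the log easy to append to, grep through, and load into a dashboard later.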

Human-in-the-loop review workflows

Recommended review checkpoints:

- after architecture decisions,

- before new dependencies,

- before database migrations,

- before production config changes.

Practical observation: teams that treat the agent like a junior dev (PR reviews, code owners, CI gates) get better outcomes than teams treating it like an oracle.
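Some of these checkpoints can be detected mechanically from the list of changed files, so the loop pauses for a human instead of proceeding. The path patterns below are illustrative guesses about a typical repo layout, and `APPROVAL_RULES` and `approvals_needed` are hypothetical names; architecture decisions still need human judgment and are not covered here.

```python
from fnmatch import fnmatchcase

# Hypothetical mapping from review checkpoint to file patterns that trigger it.
APPROVAL_RULES = {
    "new dependency": ["package.json", "*/package.json", "requirements*.txt", "*.lock"],
    "database migration": ["migrations/*", "*/migrations/*"],
    "production config": ["deploy/*", "*.prod.yaml"],
}


def approvals_needed(changed_files: list[str]) -> list[str]:
    """Return the sorted checkpoint names triggered by the changed files."""
    reasons = set()
    for path in changed_files:
        for reason, patterns in APPROVAL_RULES.items():
            if any(fnmatchcase(path, pattern) for pattern in patterns):
                reasons.add(reason)
    return sorted(reasons)
```

A non-empty result would mean “stop and open a PR for review” rather than “keep looping”.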

Best use-cases and anti-patterns: when Ralph-style loops deliver ROI

What: Ralph loops excel at constrained, checkable tasks.

Why: Loops magnify both productivity and mistakes—so pick use-cases where mistakes are easily caught.

How: Use a “constraints first” filter.

Great fits: refactors, backlog cleanup, test writing, docs

High ROI tasks:

- writing unit tests for existing modules,

- refactoring with clear success criteria,

- migrating repetitive code patterns,

- documentation updates tied to code.

Bad fits: ambiguous product decisions and unsafe domains

Avoid Ralph loops for:

- unclear requirements,

- tasks with no tests and no acceptance criteria,

- high-stakes changes without review (payments, auth, compliance).

This is consistent with broader warnings that more capable agents carry greater risk when governance is missing.

The Ralph Wiggum plugin ecosystem and what to look for in implementations

What: “Ralph Wiggum” appears across articles, repos, and event talks as a pattern and tooling ecosystem.

Why: Many developers want long-running agent loops; implementations vary widely in safety and quality.

How: Evaluate on four dimensions: loop control, context strategy, quality gates, and safety.

A “Ralph Wiggum plugin” refers, conceptually, to any implementation that keeps an AI coding agent looping until completion criteria are met (often via hook/loop control).

How to evaluate loop agents

Use this checklist:

- Loop control: explicit completion token, max iterations, safe stop

- Context strategy: fresh runs vs persistent sessions; external memory artifacts

- Quality gates: tests/lint/typechecks required for completion

- Safety: RBAC, sandboxing, audit logs, approval workflow

Common pitfalls that make loops worse than manual work

- no tests → loop “finishes” wrong work,

- broad permissions → risky changes,

- no logs → nobody trusts results,

- too-large tasks → agent churn.

Why RAASIS TECHNOLOGY + Next Steps checklist for running AI coding agents in production

Why RAASIS TECHNOLOGY

RAASIS TECHNOLOGY helps teams worldwide implement Ralph-style loops responsibly by combining:

- workflow design (PRDs, task decomposition, acceptance criteria),

- engineering enablement (CI gates, repo standards, observability),

- security guardrails (permissions, sandboxing, auditability),

- and rollout management (pilot → production).

30/60/90-day rollout plan

30 days (Pilot safely):

- choose 1–2 constrained use-cases (tests/refactor/docs),

- define completion rubrics,

- set up CI gates + iteration logs.

60 days (Scale):

- expand to more repos,

- add code-owner reviews and approval rules,

- build dashboards for loop performance (time-to-done, failure rates).

90 days (Operationalize):

- add security hardening, rotation, and auditing,

- formalize runbooks and “E-stop” procedures,

- standardize templates for PRDs and task lists.

Next Steps checklist

- Pick a task with crisp pass/fail tests

- Write a PRD and a checklist-style definition of done

- Enforce CI gates (lint/type/test)

- Run the loop with a max-iteration cap

- Require PR review before merge

- Add logs + artifact capture per iteration

- Expand gradually based on measured reliability

If you want to run AI coding agents for hours—not minutes—without sacrificing safety, code quality, or governance, partner with RAASIS TECHNOLOGY to design and implement a production-ready Ralph-style agent loop system.

Start here: https://raasis.com

| Component | What it does | Why it matters | Practical default | Common mistake | “Done” signal |
| --- | --- | --- | --- | --- | --- |
| PRD + task list | Defines scope + steps | Prevents drift | 5–15 bullet tasks | Vague goals | Checklist complete |
| Completion criteria | Defines “stop” | Stops junk shipping | Tests + lint + token | “Feels done” | Gates pass |
| Loop runner | Re-invokes agent | Enables multi-hour work | Max iterations + timeout | Infinite loops | Completion token |
| Context strategy | Controls memory | Reduces compounding errors | Fresh-run + git memory | Overloaded context | Stable progress |
| Safety controls | Limits damage | Prevents leaks/risk | RBAC + sandbox | Broad credentials | Audit logs clean |
| Observability | Explains behavior | Builds trust + speeds debug | Iteration logs + diffs | No artifacts | Clear traceability |

FAQs

1) Is the Ralph Wiggum technique a specific tool or a general pattern?

It’s primarily a pattern: repeatedly run an AI coding agent in a loop until objective completion criteria are met. Different tools implement it differently—some as a shell loop, some as a “loop agent,” and some via plugins/hooks that prevent premature exit. The key is not the brand of tool, but the discipline: defined acceptance criteria, guardrails, and auditable artifacts.

2) What’s the biggest reason Ralph-style loops fail?

Weak completion criteria. If you can’t define “done” with tests, lint/type checks, and a checklist, the agent will optimize for plausible completion instead of correctness. The loop then becomes a “make changes until it looks done” machine. Start by writing acceptance checks first, even if they’re minimal—then let the loop work toward them.

3) Should I use fresh-context loops or a persistent session?

For longer tasks, fresh-context iterations often work better because they reduce compounding confusion and force progress to persist through explicit artifacts (commits, progress files). Some loop implementations explicitly persist memory via git and progress files rather than relying on a single growing conversation.

For short tasks, persistent sessions can be faster—just keep scope tight.

4) How do I prevent an autonomous loop from making risky changes?

Use least privilege and approvals. Restrict credentials, sandbox execution, and require PR review for merges. For high-risk actions (dependency upgrades, auth changes, infra), require explicit human approval. Agentic security guidance frequently emphasizes access control and monitoring as core safeguards for autonomous systems.

5) What tasks are best for running AI coding agents for hours?

Constrained tasks with measurable outcomes: writing tests, refactoring modules, migrating repetitive patterns, fixing lint/type errors, improving docs, and clearing backlog issues with clear acceptance criteria. Avoid ambiguous product work or changes with high legal/security impact unless your gates and reviews are strong.

6) What should I log to make loop behavior auditable?

Log each iteration’s diffs, commands run, test outputs, and a short “decision summary.” Capture artifacts like screenshots for UI changes and include a final report mapping completed checklist items to commits. This style of auditability supports the broader best practice of keeping humans accountable for acceptance and oversight in autonomous coding workflows.

7) Is the Ralph Wiggum technique only for coding?

No. The same loop idea can be applied to other repeatable knowledge tasks (docs, runbooks, support macros), but code is where objective gates (tests, builds) make it most reliable. The moment you lose objective checks, the loop becomes harder to trust—so you’ll need stronger human review and more explicit rubrics.

Implement production-grade AI coding agent loops with guardrails, logging, and quality gates. Partner with RAASIS TECHNOLOGY: https://raasis.com